Content is king. Not only does it help you generate traction for your website, but it also fuels your SEO, making it easier for you to climb the search engine rankings faster than your competitors.

However, content creation is an intricate process that requires a significant amount of time and energy.

Thanks to technological advancements, we now have access to solutions that can help speed up the process, such as content-creating tools fueled by AI.

AI has come a long way. With all the recent developments, content tools powered by AI have proven to be great facilitators of the content creation process.

However, there’s a flip side to everything, and these tools are no exception. There are a variety of benefits associated with their use, but one can’t deny the unseen problems that come along with them.

AI sure has made content creation easier than ever before, but at the same time, it has made websites vulnerable to content duplication or scraping.

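This poses a real threat. Google filters duplicate and near-duplicate pages out of its results, and its spam policies target sites that publish scraped content. If a copied version of your work ends up being the one that ranks, your original page can lose visibility.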

Therefore, it’s essential that you know how to protect your website content from the rise of AI, both to safeguard your SEO performance and to reduce the risk of your pages losing search visibility to copies.


How AI Leverages Your Website Content

Before we dive in and explore how you can protect your website content from the rise of AI, it’s important to know what makes your website vulnerable in the first place, especially in the context of SEO performance and rankings.

It’s also important to understand how AI tools use your website content to serve their own users, so you can respond with effective measures.

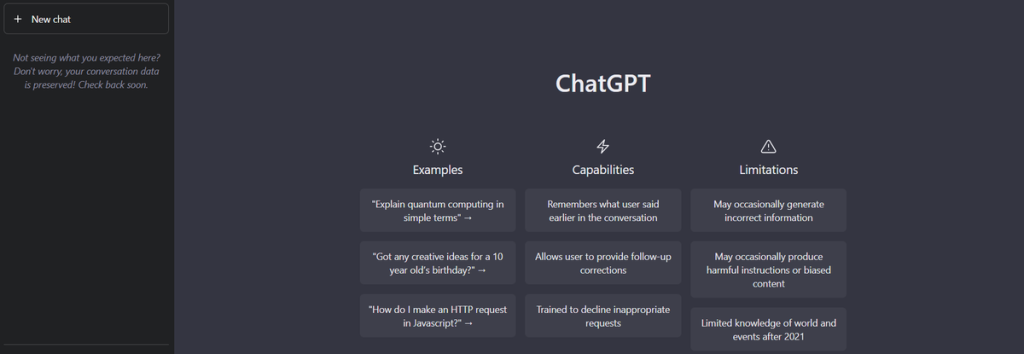

Let’s take ChatGPT as an example here. It’s an amazing AI-powered content-creating tool with the ability to generate content on any topic provided by its users.

It’s a large language model that’s trained on an enormous amount of data to process the users’ prompts and generate an optimal response.

To produce a response, ChatGPT doesn’t search the web for every prompt. It draws on what it learned from training data collected in advance from sources such as websites, books, articles, and knowledge bases, much of it gathered by web crawlers. Some versions of ChatGPT can also browse the web to pull in current pages. The model then uses this information to produce a human-like response.

Large language models, or LLMs, develop their understanding of different topics from this training data. They don’t rewrite themselves after each conversation; instead, their developers periodically retrain or fine-tune them on new data, and the responses improve with each update.

This is why AI-powered tools keep getting better over time: generally, the more high-quality data these models are exposed to, the better they get at generating responses.

Plus, tools with web browsing or retrieval features let users point the model at specific sources of information, such as a particular URL, when generating content.

For example, a user can actually mention your website as a source of information and ask the tool to mimic the writing style of one of the most competent writers on your team. And that’d be just scratching the surface.

The crawlers that AI companies operate can fetch everything publicly visible on your website, and whatever they collect can end up in training data. That includes not only your articles but also the comments others post on your pages.

So, it goes without saying that AI has the potential to redefine the content creation process as you know it. But at the same time, it exposes your website to several vulnerabilities and may cause more harm than good if you’re not careful.

Issues Caused by AI and How to Overcome Them

As mentioned earlier, the crawlers behind AI-powered content-creating tools can collect and learn from anything that you publish on your website.

Whether it’s text or visual content, anything that’s published on your website can be used for training LLMs. And that can cause multiple issues for the website owners.

When someone commands LLMs to use your website as a source of information and take inspiration from your writing style, it may lead to issues like content duplication, which can severely hinder your website’s growth.

Content duplication affects the search engine’s capability to display the best-suited results in response to the queries that people use to find relevant information.

And that’s why Google, a search engine giant with over 90% of the search market, tries to avoid showing similar or identical pages from multiple sources in its results.

Content duplication can hurt a website’s rankings even when it’s the originator of the information: if Google picks a copied version as the one to show, the original page can lose visibility and traffic.

Therefore, it is important that you take appropriate measures to protect your website from large language models and prevent them from leveraging your website’s content to generate responses.

The following are a few things that may help protect your website content from the rise of AI:

5 Steps to Prevent LLMs from Crawling Your Website

Large language models don’t visit your website themselves; the companies that build them use web crawlers to collect the information published on it. So, one of the best ways to deal with the situation is to restrict those crawlers’ access.

This can be done by preventing the web crawlers or spiders used by AI-powered tools from discovering your web pages and disallowing their access to view your content.

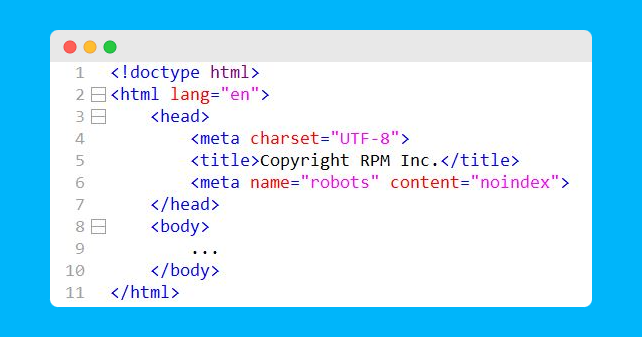

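You can do this by editing your website’s robots.txt file, which tells web bots which parts of your site they’re allowed to crawl. Keep in mind that robots.txt is a voluntary standard: reputable crawlers follow it, but it can’t technically stop a bot that chooses to ignore it.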

You can block crawlers from your entire site or only from specific pages and directories, depending on how much of your content you want to keep out of reach. Either way, it can help protect your content from LLMs.

Furthermore, you can target specific bots by their user-agent names, such as CCBot, the crawler run by Common Crawl, a nonprofit that publishes its web crawl data as a free, open dataset that has been widely used to train LLMs.

Take ChatGPT as an example. GPT-3, the model family ChatGPT grew out of, was trained on a mix of datasets including a filtered version of Common Crawl, WebText2, Books1, Books2, and Wikipedia. ChatGPT was then fine-tuned with data created by human trainers to refine its responses and generate appropriate output.

So, you should consider using the robots.txt file to block AI crawlers such as OpenAI’s GPTBot and Common Crawl’s CCBot, while still allowing the search engine crawlers you rely on, such as Googlebot, to access your website’s content.
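A robots.txt along these lines might look like the one below, wrapped in a short Python check that uses the standard library’s urllib.robotparser to confirm which crawlers the rules apply to. Treat it as a sketch under assumptions: the tokens GPTBot (OpenAI), CCBot (Common Crawl), and Google-Extended (a token Google uses to control whether content helps improve its generative AI products, separate from Search crawling) are publicly documented names, but operators add and rename crawlers, so check their documentation for the current list. The /drafts/ path and the example.com URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block three AI-related user-agent tokens from the
# whole site, and keep every other crawler (search engines included) out of
# /drafts/ only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Disallow: /drafts/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    page = "https://example.com/blog/my-post"
    for agent in ("GPTBot", "CCBot", "Google-Extended", "Googlebot"):
        # can_fetch() answers: may this user agent crawl this URL?
        print(f"{agent}: {rules.can_fetch(agent, page)}")
```

Served at the root of your domain as robots.txt, these rules ask the three listed AI tokens to stay off every page, while Googlebot can still crawl everything except /drafts/. Matching is by name, so a crawler that isn’t listed, or one that ignores robots.txt, isn’t affected.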

1. Using Noindex Meta Tags

You can also use noindex meta tags, which are added to the HTML code of individual pages. Note that noindex doesn’t stop a crawler from fetching a page. It tells search engines not to add that page to their index, so it won’t show up in search results.

Indexing is the process by which a search engine stores and organizes the pages its crawlers have fetched, so it can determine their relevance and rank them for relevant queries.

Because a crawler has to download a page before it can see the noindex tag, this method offers limited protection against AI training: search engines honor the tag, but an AI company’s crawler isn’t necessarily bound by it, and by then it already has your content.

However, you have to be extra careful if you choose to go with this method. A noindex tag that search engine crawlers honor keeps the page out of their results, which can have a tremendous impact on your rankings if you apply it to pages you want people to find.

Also, if you’re already blocking a crawler from certain pages through the robots.txt method, there is no need to add noindex tags for that crawler. In fact, it can’t see them, because robots.txt stops it from fetching those pages in the first place.

So, for each crawler and page, choose either the robots.txt method described earlier in this article or noindex tags, depending on whether you want to keep the page from being crawled or keep it out of search results.
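For reference, the tag itself is the NOINDEX_TAG string below. Because a stray noindex can quietly remove pages you want to rank, a small audit helper can be handy. This is a minimal sketch using Python’s built-in html.parser; the names RobotsMetaParser and has_noindex are hypothetical, and it only inspects meta tags, not the X-Robots-Tag HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser

# The tag discussed above, placed inside a page's <head>. name="robots"
# addresses all crawlers; a specific crawler can be named instead.
NOINDEX_TAG = '<meta name="robots" content="noindex">'


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # html.parser already lowercases tag names
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            # content can hold several comma-separated directives.
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page asks crawlers not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives
```

You could run has_noindex over the HTML of your key landing pages and flag any that come back True before they drop out of search results.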

2. Requiring Authorization for Content Access

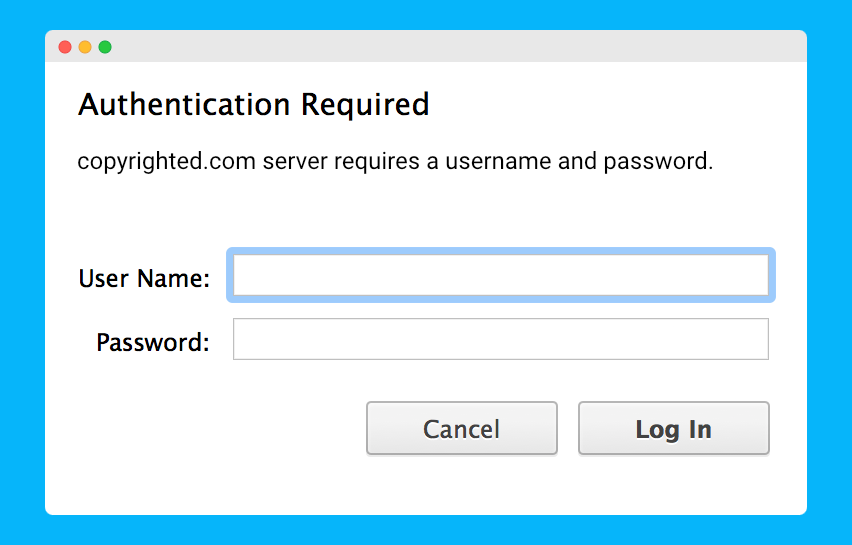

If you are worried about your website content being accessed and used by AI-powered tools, you can consider restricting public access.

This means allowing only certain users with the provided credentials to access the content published on your website.

By doing this, you effectively block web crawlers, which can’t log in, from accessing your content and keep it out of AI training data.

However, there’s a downside to implementing this method, as it can severely hamper your website’s growth.

By allowing only authorized visitors to access your content, you give up the opportunity to cast a wider net and attract a relevant audience, since search engines can’t crawl or rank pages they can’t see. Consider it the price you pay for keeping your content confidential.

So, this may not be a viable approach to consider for websites that have just gotten started or require their content to be accessed by the general public to keep the needle moving.
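As a rough illustration of login-only access, here is a minimal Python sketch using HTTP Basic authentication with the standard library’s http.server. The USERS dictionary, the credentials, and the port are hypothetical placeholders. A production site would more likely rely on its CMS or a membership plugin, store hashed passwords, and serve everything over HTTPS, since Basic credentials are only base64-encoded.

```python
import base64
import hmac
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Optional

# Hypothetical member list. A real site would store salted password hashes,
# not plain-text passwords in source code.
USERS: Dict[str, str] = {"member": "s3cret"}


def is_authorized(auth_header: Optional[str], users: Dict[str, str]) -> bool:
    """Validate an HTTP Basic 'Authorization' header against known users."""
    if not auth_header or not auth_header.startswith("Basic "):
        return False
    try:
        encoded = auth_header[len("Basic "):]
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except ValueError:  # covers malformed base64 and invalid UTF-8
        return False
    username, sep, password = decoded.partition(":")
    if not sep or username not in users:
        return False
    # compare_digest compares in constant time, so response timing doesn't
    # reveal how much of the password was correct.
    return hmac.compare_digest(password.encode("utf-8"),
                               users[username].encode("utf-8"))


class MembersOnlyHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if not is_authorized(self.headers.get("Authorization"), USERS):
            # Anonymous visitors and crawlers get a 401 challenge, no content.
            self.send_response(401)
            self.send_header("WWW-Authenticate", 'Basic realm="Members"')
            self.end_headers()
            return
        body = b"<h1>Members-only article</h1>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 8000) -> None:
    """Serve the handler locally until interrupted."""
    HTTPServer(("127.0.0.1", port), MembersOnlyHandler).serve_forever()
```

Calling run() and opening http://127.0.0.1:8000/ shows the browser’s login prompt. Any request without valid credentials, whether from a reader or a crawler, gets a 401 response and no article text.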

3. Using Gated Content

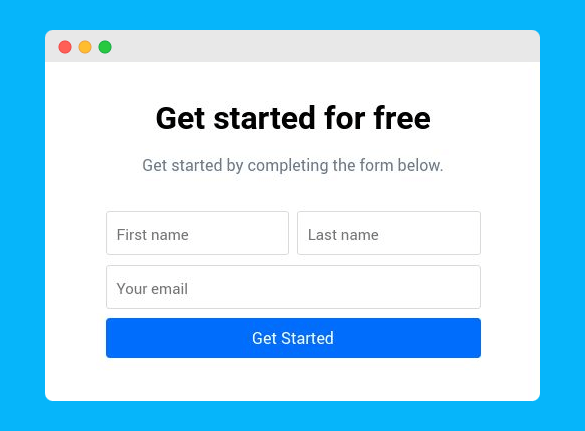

This method is a watered-down version of the one mentioned above. Your content remains open to anyone, but visitors need to provide you with certain information before they can view it.

For example, you may ask your visitors to provide you with their names, email addresses, and contact information to view the content published on your website.

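When you gate a post or page behind a form and serve the full text only after the form is submitted, bots that can’t fill out the form never receive it, which minimizes the likelihood of your content being used by large language models or AI-powered tools. If the text is merely hidden in the browser, it is still in the page’s HTML and bots can read it.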

The addition of an extra step to access the required information through your website may affect the user experience of your visitors. However, it’s a small compromise compared to the benefits associated with this measure.
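Here is a minimal server-side sketch of form gating in Python, in which visitors without a valid token receive only a teaser, so the full text never appears in the HTML that a bot downloads. It assumes a hypothetical SECRET_KEY and a signed token issued when the visitor submits the form (typically stored in a cookie); token expiry and storing the submitted details are left out.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical secret used to sign access tokens; keep the real one out of
# source control.
SECRET_KEY = b"replace-with-a-long-random-secret"


def _sign(email: str) -> str:
    return hmac.new(SECRET_KEY, email.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(email: str) -> str:
    """Called after the lead form is submitted; the token would go in a cookie."""
    return f"{email}|{_sign(email)}"


def token_is_valid(token: Optional[str]) -> bool:
    """Check that the token was signed with SECRET_KEY and not tampered with."""
    if not token or "|" not in token:
        return False
    email, _, signature = token.rpartition("|")
    return hmac.compare_digest(signature.encode("utf-8"),
                               _sign(email).encode("utf-8"))


def render_article(full_text: str, token: Optional[str],
                   teaser_words: int = 30) -> str:
    """Return the whole article only to visitors holding a valid token.

    Everyone else, crawlers included, gets a short teaser plus a prompt to
    fill in the form; the rest of the text is never sent.
    """
    if token_is_valid(token):
        return full_text
    teaser = " ".join(full_text.split()[:teaser_words])
    return f"{teaser}...\n[Enter your name and email to keep reading]"
```

Signing the token means a visitor can’t unlock the article by typing an email address into a cookie by hand; only tokens your server issued pass the check.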

4. Adding CAPTCHA

CAPTCHA stands for Completely Automated Public Turing Test to Tell Computers and Humans Apart. It’s a computer program that protects websites from content-scraping bots and automated information extraction by distinguishing human input from that of a machine.

So, adding one to your website can help protect your content from automated scraping, although it isn’t foolproof, since some bots are able to solve or bypass CAPTCHAs.

Just like the gated content approach, implementing CAPTCHA would add an additional step for your visitors to complete in order to access the required content.

The system may ask your visitors to complete an easy-to-solve puzzle or perform a certain action, like entering the text displayed in the form of an image.

If you think your visitors may not be comfortable sharing their personal information, and gated content therefore isn’t a good fit, adding a CAPTCHA to your site is the way to go.

It makes it harder for automated tools to reach your content, which in turn helps you deal with content duplication issues.

However, like gated content, a CAPTCHA adds friction that may affect the user experience, so you may see a small drop in returning visitors.
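If you use a hosted CAPTCHA service, the check that matters happens on your server, not in the browser. The sketch below assumes Google reCAPTCHA v2, whose widget submits a token in the g-recaptcha-response form field; your server then sends that token, along with your secret key, to Google’s siteverify endpoint. The function names are hypothetical, and the HTTP call is injectable so the logic can be tested offline. Other CAPTCHA providers typically use a similar verify-on-the-server step.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Callable, Dict, Optional

# Google's server-side verification endpoint for reCAPTCHA.
VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"

PostFn = Callable[[str, Dict[str, str]], Dict[str, Any]]


def post_form(url: str, data: Dict[str, str]) -> Dict[str, Any]:
    """POST form-encoded fields and decode the JSON reply."""
    body = urllib.parse.urlencode(data).encode("ascii")
    with urllib.request.urlopen(url, data=body, timeout=10) as response:
        return json.load(response)


def verify_captcha(token: str, secret: str, remote_ip: Optional[str] = None,
                   post: PostFn = post_form) -> bool:
    """Return True only if the verification service confirms the token.

    `token` is what the browser widget submitted in the
    g-recaptcha-response form field; `secret` is your server-side key.
    """
    if not token:
        return False  # the form was submitted without solving the CAPTCHA
    data = {"secret": secret, "response": token}
    if remote_ip:
        data["remoteip"] = remote_ip
    result = post(VERIFY_URL, data)
    return result.get("success") is True
```

Only when verify_captcha returns True would your handler send the protected page. With reCAPTCHA v3, the response also includes a score, which you would compare against a threshold of your choosing.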

5. Leveraging Copyright Laws

You can also protect your website content through copyright law, which acts as a safeguard against duplicated or plagiarized content.

In most countries, copyright protection applies automatically as soon as you create original work, but adding a copyright notice and clear terms of use to your pages spells out how your content may and may not be used. This acts as a warning to others who may intend to use your content without your permission.

Upon detecting an infringement, you can use the Digital Millennium Copyright Act (DMCA), a US law, to get content that was published without your consent taken down.

To do this, you send a takedown notice to the service hosting the copied content, such as the site’s hosting provider or the platform it appears on. Providers that want to keep the DMCA’s safe-harbor protection generally remove the material promptly. If the content stays up, you can consider taking the matter to court, ideally with advice from a lawyer.
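Before sending a notice, it helps to confirm how much of your text a suspect page actually reproduces. One simple approach, sketched here in Python and not tied to any particular tool, is word-shingle containment: split both texts into overlapping five-word sequences and measure what share of your article’s sequences also appear on the other page. The function names and the five-word window are assumptions you can adjust.

```python
import re
from typing import Set, Tuple

Shingle = Tuple[str, ...]


def shingles(text: str, size: int = 5) -> Set[Shingle]:
    """Return every run of `size` consecutive words, lowercased, punctuation dropped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def containment(original: str, suspect: str, size: int = 5) -> float:
    """Fraction of the original's shingles that also occur in the suspect text."""
    source = shingles(original, size)
    if not source:
        return 0.0  # original is shorter than one shingle
    return len(source & shingles(suspect, size)) / len(source)
```

A score near 1.0 means your article appears almost verbatim on the other page; where to draw the line for a closer manual review is a judgment call. Heavily reworded AI output will score lower, so this catches copying rather than paraphrase.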

So, even though copyright law may not stop your pages from being used to train large language models (how it applies to AI training is still legally unsettled), it gives you a way to act against scraped or duplicated copies of your content.

Final Words

Around 82% of businesses use content marketing to attract and connect with a relevant audience. However, creating relevant content at a consistent pace is not that easy.

This is one of the major reasons why many have started leveraging AI-powered tools to ease the content creation process. And that has made websites vulnerable to content duplication and scraping concerns.

This article explored the causes of the problem and the steps you can take to protect your website content from the rise of AI, making it easier for you to secure your intellectual property.

So, if you’ve been grappling with such concerns and looking for viable solutions, the methods recommended in this article may come in handy.